A scholarly recommendation system is an important tool for identifying prior and related resources such as literature, datasets, grants, and collaborators. A well-designed scholarly recommender saves researchers significant time and can surface information that would not otherwise be considered. The usefulness of scholarly recommendations, especially literature recommendations, has been established by the widespread acceptance of web search engines such as CiteSeerX, Google Scholar, and Semantic Scholar. This article discusses different aspects and developments of scholarly recommendation systems. We searched the ACM Digital Library, DBLP, IEEE Xplore, and Scopus for publications in the domain of scholarly recommendations for literature, collaborators, reviewers, conferences and journals, datasets, and grant funding. In total, 225 publications were identified in these areas. We discuss methodologies used to develop scholarly recommender systems. Content-based filtering is the most commonly applied technique, whereas collaborative filtering is more popular among conference recommenders. The implementation of deep learning algorithms in scholarly recommendation systems is rare among the screened publications. We found fewer publications in the areas of dataset and grant funding recommenders than in other areas. Furthermore, studies that analyze users’ feedback to improve scholarly recommendation systems are rare. This survey provides background knowledge regarding existing research on scholarly recommenders and aids in developing future recommendation systems in this domain.


A recommendation or recommender system is a type of information filtering system that employs data mining and analytics of user behaviors, including preferences and activities, to filter required information from a large information source. In the era of big data, recommendation systems have become important applications in our daily lives by recommending music, videos, movies, books, news, etc. In academia, there has been a substantial increase in the extent of information (literature, collaborators, conferences, datasets, and many more) available online and it has become increasingly taxing for researchers to stay up to date with relevant information. Several recommendation tools and search engines in academia (Google Scholar, ResearchGate, Semantic Scholar, and others) are available for researchers to recommend relevant publications, collaborators, funding opportunities, etc. Recommendation systems are evolving rapidly. The initial scholarly recommender system was intended for literature by recommending publications using content-based similarity methods [1]. Currently, there are several recommendation systems available for researchers and these are widely used in different scholarly areas.

In this article, we focus on different scholarly recommenders used to improve the quality of research. To the best of our knowledge, no article currently covers all scholarly recommendation systems together. Previous surveys were conducted separately for each type of recommendation system, and most of them addressed literature or collaborator recommendation systems [2]. There is currently no comprehensive review that describes the different types of scholarly recommendation systems, particularly for academic use.

Therefore, it is necessary to provide a survey as a guide and reference for researchers interested in this area; a systematic review of scholarly recommendation systems would serve this purpose. Such a review helps to explore research achievements in scholarly recommendation, provides researchers with an overall presentation of systems for allocating academic resources, and identifies improvement opportunities.

This article describes the different scholarly recommendation systems that researchers use in their daily activities. We are taking a closer look at the methodologies used for developing such systems. The research questions of our study are as follows:

To answer our first research question, we collected over 500 publications on scholarly recommenders from the ACM Digital Library, DBLP, IEEE Xplore, and Scopus. Literature and collaborator recommendation systems are the most studied recommenders in the literature, with many publications in each. Websites for searching publications host literature recommendations as a key function, almost all of which are free for researchers. However, only a few collaborator recommendation systems have been implemented online, and they are not free for all users. One reason may be the large amount of personal information and preferences required by these recommenders.

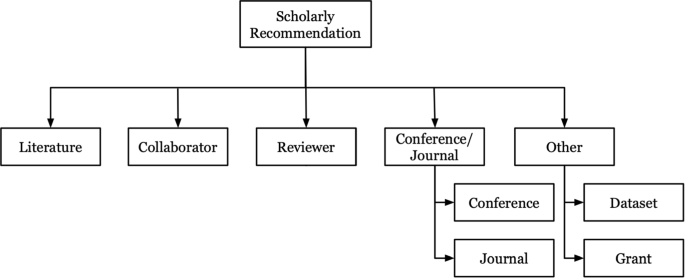

Furthermore, we studied journal and conference recommendation systems for publishing papers and articles. Although many publishing houses have implemented their own online journal recommender systems, conference recommender systems are not available online. Next, we studied reviewer recommendation problems, in which reviewers are recommended for conferences, journals, and grants. Finally, we identified datasets and grant recommendation systems, which are the least studied scholarly recommendation systems. Figure 1 shows all currently available scholarly recommendations.

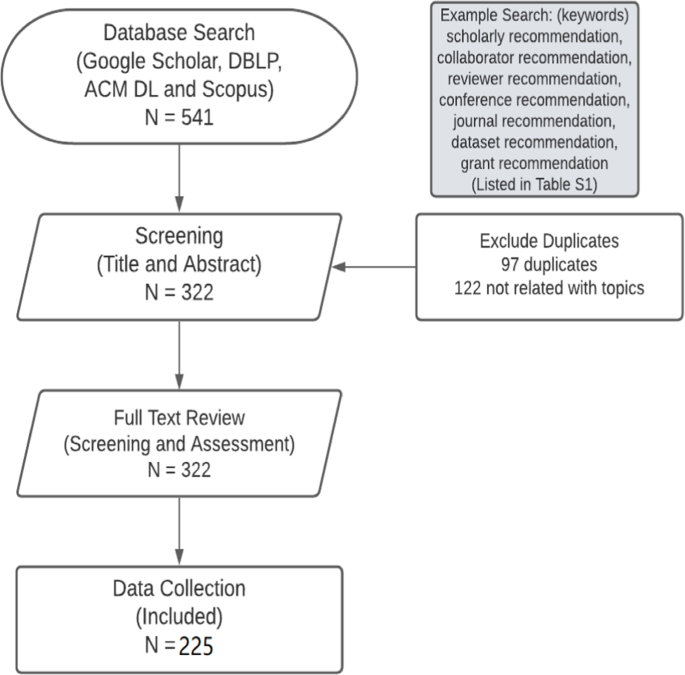

An initial literature survey was conducted to identify keywords related to individual recommendation systems that can be used to search for relevant publications. A total of 26 keywords were identified to search for relevant publications (see Supplementary 17).

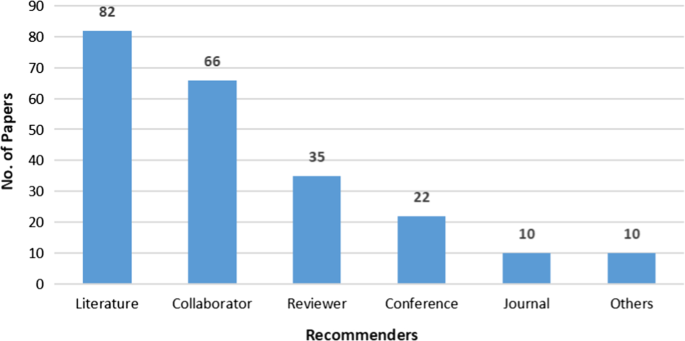

At the end of the full-text review process, 225 publications were included in this study. The number of publications on individual recommendation systems is shown in Fig. 2. To be eligible for the review, a publication had to address the description, evaluation, and use of natural language processing algorithms. During the full-text review process, we excluded studies that were not peer-reviewed, such as abstracts and commentary, perspective, or opinion pieces. Finally, we performed data extraction and analysis on the 225 articles and summarized their data, methodology, evaluation metrics, and detailed categorization in the following sections. The PRISMA flowchart for our publication collection, with example search keywords, is shown in Fig. 3.

The remainder of this paper is organized as follows. Section 2 describes different literature recommendation systems based on their methodologies and corresponding datasets. Section 3 describes different approaches for developing collaborator recommendation systems. Section 4 reviews the journal and conference venue recommendation systems. Section 5 describes reviewer recommendation systems. In Sect. 6, we review all other scholarly recommendation systems available in the literature, such as dataset and grant recommendation systems. Finally, Sect. 7 discusses future work and concludes the article.

Literature recommendation is one of the most well-studied scholarly recommendation problems with several research articles published in the past decade. Recommender systems for scholarly literature have been widely used by researchers to locate papers, keep up with their research fields, and find relevant citations for drafts. To summarize the literature recommendation systems, we collected 82 publications for scholarly papers and citations.

The first research paper recommendation system was introduced as a part of the CiteSeer project [1]. In total, 11 out of 82 publications (approximately 13%) used applications or methodologies based on a citation recommendation system. As one of the widest subsets of scholarly literature recommendation, citation recommendation aims to recommend citations to researchers while authoring a paper and finding work related to their ideas. It recommends citations based on the content of the researchers’ work. Among the 11 citation recommender papers, content-based filtering (CBF) methodologies have been widely used on the fragments of the citations for the recommendation, and some of them applied collaborative filtering (CF) to develop a potential citation recommendation system based on users’ research interests and citation networks [3].

In this section, we describe the datasets used to develop literature recommendation systems. A total of 75 reviewed publications evaluated the methodologies using different datasets. The authors of 45 publications chose to construct their own datasets based on manually collected information or paid datasets that were rarely used. Several open-source published datasets are commonly used to develop literature recommendations.

Owing to the rapid development of modern websites for literature search, datasets for literature recommendation are readily available. There were 28 publications that used public databases for the testing and evaluation of the methods. The sources of these datasets are listed in Table 1. These websites collected publications from several scientific publishers and indexed them with their references and keywords. Using the information extracted from these public resources, researchers created datasets to perform recommendation methodologies and obtain the ground truth for offline evaluation.

Currently, research in any area has expanded exponentially beyond its own fields to other research fields in the form of collaborative research. Collaboration is essential in academia to obtain good publications and grants. Identifying and determining a potential collaborator is challenging. Hence, a recommendation system for collaboration would be very helpful. Fortunately, many publications on recommending collaborators are available.

A total of 59 publications were identified using databases to develop, test and evaluate recommender systems. In 20 publications, the authors constructed their own datasets based on manually collected information, unique social platforms, or paid databases that are rarely used. In 39 out of the 59 publications, the authors used open-source databases. Of these 39 publications, 17 used data from the DBLP library to evaluate the developed collaborator recommendation systems.

The datasets needed for developing collaborator recommendations usually cover two major subjects: (1) contexts and keywords based on researchers’ information; and (2) information networks based on academic relationships. Owing to the rapid development of online libraries and academic social networks, the extraction of information networks has become feasible. These datasets extract relevant information from different online sources in order to (i) construct profiles for researchers, (ii) retrieve keywords for constructing a structure for specific domains and concepts, and (iii) extract weighted co-author graphs. In addition, data mining and social network analysis tools may also be used for clustering analysis and for identifying representatives of expert communities. The sources of datasets used in the 59 publications are listed in Table 5.

Table 5 Sources of datasets used for collaborator recommendation approaches

Among the reviewed studies, most researchers extracted information from these databases to construct training and evaluation datasets for their recommendations.

The DBLP dataset was used in 17 publications to evaluate the performance of the collaborator recommendation approaches. The DBLP computer science bibliography provides an open bibliographic list of information on major computer science fields and is widely used to construct co-authorship networks. In the co-authorship network graphs of DBLP bibliography, the nodes represent computer scientists and the edges represent a co-authorship incident.

ScholarMate, a social research management tool launched in 2007, was used in 4 publications. It has more than 70,000 research groups created by researchers for their own projects, collaboration, and communication. As a platform for presenting research outputs, ScholarMate automatically collects scholarly information about researchers’ output from multiple online resources. These resources include online databases such as Scopus, one of the largest abstract and citation databases for peer-reviewed literature, including scientific journals, books, and conference proceedings. ScholarMate uses the aggregated data to provide researchers with recommendations on relevant opportunities based on their profiles.

Similar to other scholarly recommendation areas, research on methodologies to develop collaborator recommendations can be classified into the following categories: CBF, CF, and hybrid approaches. In this section, we introduce the approaches that are widely used in each recommendation class. In addition, we provide an overview of the most important aspects and techniques used in these fields.

A total of 23 publications presented CBF methods for collaborator recommendation. CBF focuses on the semantic similarity between researchers’ personal features, such as their personal profiles, professional fields, and research interests. Natural language processing (NLP) techniques were used to extract keywords from the associated documents to define researchers’ professional fields and interests. A summary of publications on collaborator recommendation using CBF approaches is presented in Table 6.

Table 6 Overview of collaborator recommendation system using CBF

The Vector Space Model (VSM) is widely used in content-based recommendation methodologies. By expressing queries and documents as vectors in a multidimensional space, these vectors can be used to calculate the relevance or similarity. Yukawa et al. [84] proposed an expert recommendation system employing an extended vector space model that calculates document vectors for every target document for authors or organizations. It provides a list in the order of relevance between academic topics and researchers.

Topic clustering models using VSM have been widely used to profile fields of researchers using a list of keywords with a weighting schema. Using a keyword weighting model, Afzal and Maurer [85] implemented an automated approach for measuring expertise profiles in academia that incorporates multiple metrics for measuring the overall expertise level. Gollapalli et al. [86] proposed a scholarly content-based recommendation system by computing the similarity between researchers based on their personal profiles extracted from their publications and academic homepages.
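The vector space idea described above can be illustrated with a minimal sketch. It is not the method of any cited paper: the whitespace tokenizer, the smoothed IDF weighting, and the function names `tfidf_vectors`, `cosine`, and `rank_researchers` are assumptions made for illustration only. Each researcher profile is treated as one document, and researchers are ranked by the cosine similarity between their TF-IDF vector and that of a query.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Return one sparse TF-IDF vector (dict: term -> weight) per document."""
    tokenized = [doc.lower().split() for doc in docs]
    n_docs = len(tokenized)
    # Document frequency: number of documents that contain each term.
    df = Counter(term for tokens in tokenized for term in set(tokens))
    vectors = []
    for tokens in tokenized:
        tf = Counter(tokens)
        # Smoothed IDF (an assumed weighting) keeps every weight positive.
        vectors.append({
            term: (count / len(tokens))
            * (math.log((1 + n_docs) / (1 + df[term])) + 1)
            for term, count in tf.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(weight * v.get(term, 0.0) for term, weight in u.items())
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


def rank_researchers(query, profiles):
    """Rank researchers (dict: name -> profile text) by similarity to a query."""
    names = list(profiles)
    vectors = tfidf_vectors([profiles[name] for name in names] + [query])
    query_vec = vectors[-1]
    scored = [(name, cosine(query_vec, vec)) for name, vec in zip(names, vectors)]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)
```

In practice, the profile text would come from publications or homepages, and a real system would add stop-word removal and stemming before weighting.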

Topic-based models have also been widely applied for document processing. The topic-based model introduces a topic layer between the researchers and extracted documents. For example, in a popular topic modeling approach, based on the latent Dirichlet allocation (LDA) method, each document is considered as a mixture of topics and each word in a document is considered randomly drawn from the document’s topics. Yang et al. [87] proposed a complementary collaborator recommendation approach to retrieve experts for research collaboration using an enhanced heuristic greedy algorithm with symmetric Kullback–Leibler divergence based on a probabilistic topic model. Kong et al. [88] applied a collaborator recommendation system by generating a recommendation list based on scholar vectors learned from researchers’ research interests extracted from documents based on topic modeling.
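As a hedged illustration of how topic distributions can be compared, the sketch below computes a symmetric Kullback–Leibler divergence between two researchers' topic mixtures. It assumes the mixtures are already available (for example, from an LDA model) as equal-length probability lists, and it uses the sum of the two directed divergences as the symmetric form; other definitions, such as the average, also exist. The function names and the smoothing constant `eps` are illustrative and are not taken from the cited work.

```python
import math


def kl_divergence(p, q, eps=1e-12):
    """Directed KL divergence D(p || q) for two discrete distributions."""
    # eps is an assumed smoothing constant that avoids division by zero.
    return sum(
        pi * math.log((pi + eps) / (qi + eps))
        for pi, qi in zip(p, q)
        if pi > 0
    )


def symmetric_kl(p, q):
    """Symmetric form taken here as D(p || q) + D(q || p)."""
    return kl_divergence(p, q) + kl_divergence(q, p)
```

A small value indicates researchers with similar topic mixtures, whereas a large value indicates different research profiles.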

As mentioned previously in the literature recommendation section, content-based methods usually suffer from a high calculation cost because of the large number of analyzed documents and the size of the vector space. To minimize this cost and maximize the preference, Kong et al. [100] presented a scholarly collaborator recommendation method based on matching theory, which adopts multiple indicators extracted from associated documents to integrate the preference matrix among researchers. Some researchers have also modified weighted features and combined hybrid topic extraction methods with other factors to obtain higher accuracy. For example, Sun et al. [92] designed a career age-aware academic collaborator recommendation model consisting of authorship extraction from digital libraries, topic extraction based on published abstracts, and a career age-aware random walk for measuring scholar similarity.

Six publications presented a methodology based merely on collaborative filtering. Traditional CF-based recommendations aim to find the nearest neighbor in a social context similar to that of the targeted user. It selects the nearest neighbors based on the users’ rating similarities. When the users rate a set of items in a manner similar to that of a target user, the recommendation systems would define these nearest neighbors as groups with similar interests and recommend items that are favored by these groups but not discovered by the target user. To apply this method to collaborator recommendation, the system would recommend persons who have worked with a target author’s colleagues but not with the target author himself. Analogously, the system considers each author as an item to be rated and the scholarly activities such as writing a paper together as a rating activity, following the methodology of traditional CF-based recommendations. Researchers’ publication activities are transformed into rating actions, and the frequency of co-authored papers is considered a rating value. Using this criterion, a graph based on a scholarly social network was built. A summary of the collaborator recommendation paper using CF approaches is presented in Table 7.
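The transformation described above, in which co-authorship frequency is treated as a rating, can be sketched as follows. This is an illustrative simplification rather than a method from the reviewed papers: the function names and the scoring rule (the product of the two co-authorship counts along a two-step path) are assumptions. Candidates are people who have worked with the target author's colleagues but not with the target author.

```python
from collections import Counter, defaultdict
from itertools import combinations


def coauthor_ratings(papers):
    """Build a symmetric 'rating' table: number of papers each pair co-authored."""
    ratings = defaultdict(Counter)
    for authors in papers:
        for a, b in combinations(sorted(set(authors)), 2):
            ratings[a][b] += 1
            ratings[b][a] += 1
    return ratings


def recommend_collaborators(target, ratings, top_n=3):
    """Recommend colleagues-of-colleagues the target has not yet worked with."""
    scores = Counter()
    for colleague, weight in ratings[target].items():
        for candidate, strength in ratings[colleague].items():
            if candidate != target and candidate not in ratings[target]:
                # Assumed scoring rule: stronger ties on both hops score higher.
                scores[candidate] += weight * strength
    return [name for name, _ in scores.most_common(top_n)]
```

For example, with the author lists `[["A", "B"], ["A", "B"], ["B", "C"], ["B", "C"], ["A", "D"], ["D", "E"]]`, the call `recommend_collaborators("A", ratings)` returns `["C", "E"]`, because C is reached through the stronger tie with B.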

Table 7 Overview of collaborator recommendation system using collaborative filtering

Based on this co-authorship network transformed from researchers’ publication activities, several methods for link prediction and edge weighting have been utilized. Benchettara et al. [108] solved the problem of link prediction in co-authoring networks by using a topological dyadic supervised machine learning approach. Koh and Dobbie [110] proposed an academic collaborator recommendation approach that uses a co-authorship network with a weighted association rule approach using a weighting mechanism called sociability. Recommendation approaches based on this co-authorship network transformed from publication activities, where all nodes have the same functions, are called homogeneous network-based recommendation approaches.

The random walk model, which can define and measure the confidence of a recommendation, is popular in co-authorship network-based collaborator recommendations. Tong et al. [113] published Random Walk with Restart (RWR), a famous random walk model, which provides a good way to measure how closely related two nodes are in a graph. Applications and improvements based on RWR model are widely used for link prediction in co-authorship networks. Li et al. [109] proposed a collaboration recommendation approach based on a random walk model using three academic metrics as the basics through co-authorship relationship in a scholarly social network. Yang et al. [112] combined the RWR model with the PageRank method to propose a nearest-neighbor-based random walk algorithm for recommending collaborators.
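A minimal sketch of random walk with restart on a weighted co-authorship graph is given below. It assumes the graph is stored as a symmetric adjacency dictionary in which every neighbour also appears as a key; the restart probability of 0.15, the fixed iteration count, and the function name are illustrative choices, not values taken from the cited papers. At each step the walker either follows an edge with probability proportional to its weight or jumps back to the source node.

```python
def random_walk_with_restart(graph, source, restart=0.15, iterations=100):
    """Return the RWR relevance of every node with respect to `source`.

    graph: dict mapping node -> dict of neighbour -> edge weight.
    """
    scores = {node: 0.0 for node in graph}
    scores[source] = 1.0
    for _ in range(iterations):
        updated = {node: 0.0 for node in graph}
        for node, neighbours in graph.items():
            total = sum(neighbours.values())
            if total == 0:
                # Isolated node: return its probability mass to the source.
                updated[source] += (1 - restart) * scores[node]
                continue
            for neighbour, weight in neighbours.items():
                updated[neighbour] += (1 - restart) * scores[node] * weight / total
        # With probability `restart` the walker jumps back to the source.
        updated[source] += restart
        scores = updated
    return scores
```

Nodes that are not yet direct neighbours of the source can then be ranked by their score to obtain candidate collaborators.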

Compared with content-based recommendation approaches, which involve only the published profiles of researchers without considering scholarly social networks, homogeneous network-based approaches apply CF methods based on social network technology to recommend collaborators. Lee et al. [111] compared academic social network (ASN)-based collaborator recommendations with metadata-based and hybrid recommendation methodologies, and suggested the ASN-based approach as the best method. However, homogeneous network-based collaboration recommendations do not consider the contextual features of researchers. As a combination of these two methods, a hybrid collaboration recommendation system based on a heterogeneous network is popular in current collaboration recommendation approaches and applications.

Approaches from the previously introduced recommendation classes may be combined into hybrid approaches. Of the reviewed papers, 37 applied approaches with hybrid characteristics. Heterogeneous network-based recommendations improve on homogeneous network-based ones by also taking the contextual features of researchers into account. Table 8 summarizes all collaborator recommendation papers that we collected using hybrid approaches.

Heterogeneous networks are networks in which two or more node classes are categorized by their functions. Based on the co-authorship network used in most homogeneous network-based approaches, heterogeneous network-based approaches incorporate more information into the network, such as the profiles of researchers, the results of topic modeling or clustering, and the citation relationship between researchers and their published papers. Xia et al. [52] presented MVCWalker, an innovative method based on RWR for recommending collaborators to academic researchers. Based on academic social networks, other factors such as co-author order, latest collaboration time, and times of collaboration were used to define link importance. Kong et al. [114] proposed a collaboration recommendation model that combines the features extracted from researchers’ publications using a topic clustering model and a scholar collaboration network using the RWR model to improve the recommendation quality. Kong et al. [115] proposed a collaboration recommendation model that considers scholars’ dynamic research interests and collaborators’ academic levels. By using the LDA model for topic clustering and fitting the dynamic transformation of interest, they combined the similarity and weighting factors in a co-authorship network to recommend collaborators with high prevalence. Xu et al. [116] designed a recommendation system to provide serendipitous scholarly collaborators that could learn the serendipity-biased vector representation of each node in the co-authorship network.

Table 8 Overview of collaborator recommendation system using hybrid methods

In this section, we describe recommendation systems that can help researchers identify scientific research publishing opportunities. Recently, there has been an exponential increase in the number of journals and conferences researchers can select to submit their research. Recommendation systems can alleviate some of the cognitive burden that arises when choosing the right conference or journal for publishing a work. In the following sections, we describe academic venue recommendation systems for conferences and journals.

The dramatic rise in the number of conferences/journals has made it nearly impossible for researchers to keep track of academic conferences. While there is an argument to be made that researchers are familiar with the top conferences in their field, publishing to those conferences is also becoming increasingly difficult due to the increasing number of submissions. A conference recommendation system will be helpful in reducing the time and complexity requirement to find a conference that meets the needs of a given researcher. Thus, conference recommendation is a well-studied problem in the domain of data analysis, with many studies being conducted using a variety of methods such as citation analysis, social networks, and contextual information.

Table 9 Sources of data used for Conference Recommendation Systems

All reviewed publications used databases to test their methodology. Two publications chose to construct a custom dataset based on the manual collection of information and one publication used a rare paid dataset. The remaining 20 studies used published open-source databases to create the datasets used in their testing and evaluation environments. Table 9 provides a summary of the frequencies with which published open-source databases were used.

DBLP was the most used database with 12 occurrences, followed by ACM Digital Library and WikiCFP, both with 5 occurrences. The unique databases utilized in conference recommendation systems are Microsoft Academic Search, CORE Conference Portal, Epinion, IEEE Digital Library, and Scigraph.

Microsoft Academic Search hosts over 27 million publications from over 16 million authors and is primarily used to extract metadata on authors, their publications, and their co-authors. The CORE Conference Portal provides rankings for conferences, primarily in computer science and related disciplines, along with metadata on conference publishers. Epinion is a general review website founded in 1999 and is utilized to create networks of ‘trusted’ users. The IEEE Digital Library is a database used to access journal articles, conference proceedings, and other publications in computer science, electrical engineering, and electronics. Scigraph is a knowledge graph aggregating metadata from publications in Springer Nature and other sources. WikiCFP is a website that collates and publishes calls for papers.

There are three main subtypes of conference recommendation systems: content-based, collaborative, and hybrid systems. The following section provides an overview of the most popular methods used by each subtype.

Content-based filtering (CBF)

Only 1 of the 23 publications in conference recommendations utilized pure CBF. Using data from Microsoft Academic Search, Medvet et al. [146] created three disparate CBF systems seeking to reduce the input data required for accurate recommendations: (a) utilizing Cavnar-Trenkle text classification, (b) utilizing two-step latent Dirichlet allocation (LDA), and (c) utilizing LDA alongside topic clustering.

Cavnar-Trenkle classification is an n-gram-based text classification method. Given a set of conferences \(C = \{c_1, c_2, \ldots\}\), it is necessary to define for each conference \(c \in C\) a set of papers \(P = \{p_1, p_2, \ldots\}\) that were published in conference \(c\). The method creates an n-gram profile for each conference \(c \in C\), using n-grams generated from each paper \(p \in P\) in the conference. Finally, it computes the distance between the n-gram profile of each conference \(c \in C\) and that of a publication of interest \(p_i\), and recommends the \(n\) conferences with the smallest distance to \(p_i\).
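The procedure above can be sketched in a few lines. This is a simplified illustration rather than the implementation of Medvet et al.: it assumes character n-grams of length 1 to 3, a profile limited to the 300 most frequent n-grams, and the rank-based "out-of-place" distance commonly associated with this method. All function names and parameter values are hypothetical.

```python
from collections import Counter


def ngram_profile(text, max_n=3, top_k=300):
    """Map each of the top_k most frequent character n-grams to its rank."""
    counts = Counter()
    for word in text.lower().split():
        padded = f"_{word}_"  # mark word boundaries
        for n in range(1, max_n + 1):
            for i in range(len(padded) - n + 1):
                counts[padded[i:i + n]] += 1
    # Sort by descending frequency; break ties alphabetically for determinism.
    ranked = sorted(counts, key=lambda gram: (-counts[gram], gram))[:top_k]
    return {gram: rank for rank, gram in enumerate(ranked)}


def out_of_place(paper_profile, conference_profile):
    """Sum of rank differences; n-grams missing from the conference get a fixed penalty."""
    max_penalty = len(conference_profile)
    return sum(
        abs(rank - conference_profile[gram]) if gram in conference_profile else max_penalty
        for gram, rank in paper_profile.items()
    )


def recommend_conferences(paper_text, conference_papers, n=1):
    """Return the n conferences whose profiles are closest to the paper's profile."""
    paper_profile = ngram_profile(paper_text)
    distances = {
        conference: out_of_place(paper_profile, ngram_profile(" ".join(papers)))
        for conference, papers in conference_papers.items()
    }
    return sorted(distances, key=distances.get)[:n]
```

Here `conference_papers` maps each conference name to a list of paper texts (for example, titles or abstracts). With very short texts the rank-based distance is noisy, so realistic profiles would be built from many papers per conference.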

Collaborative filtering

Of the 23 collected publications, 18 employed collaborative filtering strategies. The most popular filtering approach was based on generating and analyzing a variety of networks built from different types of metadata, including citations, co-authorship, references, and social proximity.

Asabere and Acakpovi [147, 148] generated a user-based social context aware filter with breadth-first search (BFS) and depth-first search (DFS) on a knowledge graph created by computing the Social Ties between users, and added geographical, computing, social, and time contexts. Social Ties were generated by computing the network centrality based on the number of links between users and presenters at a given conference.

Other types of network-based collaborative filters include a co-author-based network that assigns weights with regard to venues where one’s collaborators have published previously [149, 150], a broader metadata-based network that utilizes one or more distinct characteristics to assign weights to conferences (i.e., citations, co-authors, co-activity, co-interests, colleagues, interests, location, references, etc.) [146, 151,152,153,154], and RWR-based methods [155, 156].

Kucuktunc et al. [155] iterated the traditional RWR model by adding a directionality parameter \((\kappa )\) , which is used to chronologically calibrate the recommendations as either recent or traditional. The list of publications that used CF for conference recommendations is presented in Table 10.

Table 10 Overview of conference recommendation systems using collaborative filtering

A total of 6 of the 23 publications used hybrid filtering strategies. The most common hybrid strategy is to amalgamate standard topic-based content filtering with network-based collaborative filters. Table 11 summarizes publications that used hybrid filtering methods for conference recommendations.

Table 11 Overview of conference recommendation systems using hybrid filtering

As of April 14, 2020, the Master Journal List of the Web of Science Group contains 24,748 peer-reviewed journals for publishing articles from different publishing houses. The authors may face difficulties in finding suitable journals for their manuscripts. In many cases, a manuscript submitted to a journal is rejected because it is not within the scope of that journal. Finding suitable journals for a manuscript is the most important step in publishing articles. A journal recommendation system may reduce the burden of authors by selecting appropriate journals to publish as well as reducing the burden of editors from rejecting manuscripts that do not align with the scopes of the journals. Many publishing companies have their own journal finders that can help authors find suitable journals for their manuscripts.

In this section, we review all available journal recommendation systems by analyzing the methods used and their journal coverage. There are a total of ten journal recommendation systems, but we found only four papers describing details corresponding to their recommendation procedures. A detailed list of journal recommenders with their methods and datasets is provided in Table 12. Most journal recommenders were developed for different publishing houses. Most journal recommenders contain journals from multiple domains except eTBLAST, Jane, and SJFinder, where the journals are from the biomedical and life science domains.

Table 12 Detailed overview of journal recommendation systems

TF-IDF, kNN, and BM25 were used to find similar journals using the provided keywords. Kang et al. [172] used a classification model (using kNN and SVM) to identify suitable journals. Errami et al. [169] used the similarity between the provided keywords and journal keywords.

Rollins et al. [39] evaluated a journal recommender by using feedback from real users. Kang et al. [172] evaluated a system based on previously published articles. If the top three or top ten recommended journals contained the journal in which the input paper was published, then this would be counted as a correct recommendation; otherwise, it would be counted as a false recommendation. Similarly, eTBLAST [169] and Jane [170] were evaluated using previously published articles.
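The offline evaluation protocol described above, in which a recommendation counts as correct when the journal that actually published the paper appears among the top recommendations, can be sketched as follows. The function name and the input format (one ranked list of journals per test paper) are assumptions made for illustration.

```python
def top_k_accuracy(recommendations, true_journals, k):
    """Fraction of papers whose true journal appears in the top-k recommendations."""
    if not true_journals:
        raise ValueError("at least one test paper is required")
    hits = sum(
        1
        for ranked, truth in zip(recommendations, true_journals)
        if truth in ranked[:k]
    )
    return hits / len(true_journals)
```

Calling the function with `k=3` and `k=10` would correspond to the top-three and top-ten settings mentioned above.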

Deep learning-based recommenders perform better than traditional matching-based NLP or machine learning algorithms. However, none of the existing systems available for journal recommendations uses deep learning algorithms. One of the future goals may be the implementation of different deep learning algorithms. In addition to these publication houses, developing journal recommenders for different publication repositories (DBLP, arxiv, etc.) may be another future task.

In this section, we describe paper, journal, and grant reviewer recommendation systems that are available in the literature. With the rapid increase in publishable research materials, the pressure to find reviewers is overwhelming for conference organizers and journal editors. Similarly, program directors are overwhelmed when finding appropriate reviewers for grants.

In the case of conferences, authors normally choose some research fields during submission. The organizing committee of a conference typically has a set of researchers as reviewers who have been assigned to the same set of fields. Reviewers are assigned papers based on the matching of these fields. However, the research fields are broad and may not exactly match those of the reviewer. In the case of journals, either authors suggest reviewers or editors must find reviewers for the manuscript. For grant proposals, program directors are responsible for finding suitable reviewers.

The problem of finding reviewers can be addressed by a reviewer recommendation system, which recommends reviewers based on the similarity of contents or past experiences. The reviewer recommendation problem is also known as the reviewer assignment problem. We searched for publications related to both reviewer recommendations and assignments.

A total of 67 reviewed publications were retrieved using Google searches, and 36 publications were included in the final analysis after title, abstract, and full-text screening. Among these 36 publications, 23 conducted experiments to supplement the theoretical contents, and the sources of the datasets used are listed in Table 13.

Table 13 Sources of datasets used for reviewer recommendation

Broadly, there are three major categories in terms of techniques used: one is based on information retrieval (IR); another is based on optimization, where the recommendation is viewed as an enhanced version of the generalized assignment problem (GAP); and the third includes techniques that fall between the first two categories.

IR-based studies generally focus on calculating matching degrees between reviewers and submissions.

Hettich and Pazzani [178] discussed a prototype application in the U.S. National Science Foundation (NSF) to assist program directors in identifying reviewers for proposals, named Revaid, which uses TF-IDF vectors for calculating proposal topics and reviewer expertise, and defined a measure called the Sum of Residual Term Weight (SRTW) for the assignment of reviewers. Yang et al. [179] constructed a knowledge base of expert domains extracted from the web and used a probability model for domain classification to compute the relatedness between experts and proposals for ranking expertise. Ferilli et al. [180] used Latent Semantic Indexing (LSI) to extract the paper topic and expertise of reviewers from publications available online, followed by Global Review Assignment Processing Engine (GRAPE), a rule-based expert system for the actual assignment of reviewers.
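To make the notion of a matching degree between reviewers and submissions concrete, the sketch below ranks reviewers by the cosine similarity of TF-IDF vectors. It is a generic illustration and an assumption on our part: it does not reproduce Revaid's SRTW measure or any other cited system, and the reviewer names, token lists, and smoothed IDF formula are hypothetical choices.

```python
import math
from collections import Counter


def tfidf_vectors(docs):
    """Map each tokenized document to a {term: tf-idf weight} dictionary."""
    n_docs = len(docs)
    doc_freq = Counter(term for doc in docs for term in set(doc))
    vectors = []
    for doc in docs:
        counts = Counter(doc)
        vectors.append({
            # Smoothed IDF (an assumed variant) keeps every weight positive.
            term: (count / len(doc))
            * (math.log((1 + n_docs) / (1 + doc_freq[term])) + 1.0)
            for term, count in counts.items()
        })
    return vectors


def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dictionaries."""
    dot = sum(w * v[t] for t, w in u.items() if t in v)
    norm_u = math.sqrt(sum(w * w for w in u.values()))
    norm_v = math.sqrt(sum(w * w for w in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0


# Hypothetical reviewer profiles (tokens from past publications) and a submission.
reviewer_profiles = {
    "reviewer_a": "topic model latent dirichlet allocation text mining".split(),
    "reviewer_b": "protein folding molecular dynamics simulation".split(),
}
submission = "topic model for text classification".split()

names = list(reviewer_profiles)
vectors = tfidf_vectors([reviewer_profiles[n] for n in names] + [submission])
submission_vec = vectors[-1]
scores = {name: cosine(vectors[i], submission_vec) for i, name in enumerate(names)}
ranking = sorted(names, key=lambda name: -scores[name])
print(ranking, scores)
```

In this toy example only reviewer_a shares vocabulary with the submission, so reviewer_b receives a score of zero and is ranked last.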

Serdyukov et al. [181] formulated the search for an expert as an absorbing random walk in a document-candidate graph. A recommendation was made on reviewer candidate nodes with high probabilities after an infinite number of transitions in the graph, with the assumption that expertise is proportional to probability. Yunhong et al. [182] used LDA for proposal and expertise topic extraction, and defined a weighted sum of varied index scores for ranking reviewers for each proposal. Peng et al. [183] built a time-aware personal profile for each reviewer using LDA to represent the reviewer's expertise; a weighted average of the matching degrees computed from the topic vectors and the TF-IDF vectors of the reviewer and the submitted papers was then used for recommendation. Medakene et al. [184] used pedagogical expertise in addition to the research expertise of the reviewers with LDA in building reviewers’ profiles and used a weighted sum of the topic similarity and the reference similarity for assigning reviewers to papers. Rosen-Zvi et al. [185] proposed an Author-Topic Model (ATM) that extends LDA to include authorship information. Later, Jin et al. [186] proposed an Author-Subject-Topic (AST) model, with the addition of a ‘subject’ layer that supervises the generation of hierarchical topics and the sharing of subjects among authors for reviewer recommendations. Alkazemi [187] developed PRATO (Proposals Reviewers Automated Taxonomy-based Organization), which first sorted proposals and reviewers into categorized tracks as defined by a tree of hierarchical research domains, and then assigned the reviewers based on the matching of tracks using Jaccard similarity scores. Cagliero et al. [188] proposed an association rule-based methodology (Weighted Association Rules, WAR) to recommend additional external reviewers.
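Track matching with Jaccard similarity, as mentioned above for PRATO, can be illustrated with a short sketch. The track names and reviewer identifiers are hypothetical, and the code shows only the similarity measure itself, not PRATO's taxonomy construction or assignment procedure.

```python
def jaccard(a, b):
    """Jaccard similarity: size of the intersection over size of the union."""
    a, b = set(a), set(b)
    union = a | b
    return len(a & b) / len(union) if union else 0.0


# Hypothetical research tracks assigned to one proposal and to two reviewers.
proposal_tracks = {"machine learning", "information retrieval"}
reviewer_tracks = {
    "reviewer_1": {"information retrieval", "databases", "machine learning"},
    "reviewer_2": {"computer graphics"},
}

scores = {
    name: jaccard(proposal_tracks, tracks)
    for name, tracks in reviewer_tracks.items()
}
best = max(scores, key=scores.get)
print(best, scores)
```

Here reviewer_1 shares two of three distinct tracks with the proposal (score 2/3), while reviewer_2 shares none (score 0).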

Ishag et al. [189] modeled citation data of published papers as a heterogeneous academic network, integrating authors’ h-index and papers’ citation counts, proposed a quantification to account for author diversity, and formulated two types of target patterns, namely, researcher-general topic patterns (RGP) and researcher-specific topic patterns (RSP), for searching reviewers.

Recently, deep learning techniques have been incorporated into feature representations. Zhao et al. [190] used word embeddings to represent the contents of both the papers and reviewers. Then, the Word Mover’s Distance (WMD) method was used to measure the minimum distances between paper and reviewer vectors. Finally, the Constructive Covering Algorithm (CCA) was used to classify reviewer labels for recommending reviewers. Anjum et al. [191] proposed a common topic model (PaRe) that jointly models the topics of a submission and a reviewer profile based on word embeddings. Zhang et al. [192] proposed a two-level bidirectional gated recurrent unit with an attention mechanism (Hiepar-MLC) to represent the semantic information of reviewers and papers and used a simple multilabel-based reviewer assignment strategy (MLBRA) to match the most similar multilabeled reviewer to a particular multilabeled paper.

Co-authorship and reviewer preferences were incorporated into collaborative filtering applications. Li and Watanabe [193] designed a scale-free network combining preferences and a topic-based approach that considers both reviewer preferences and the relevance of reviewers and submitted papers to measure the final matching degrees between reviewers and submitted papers. Xu and Du [194] designed a three-layer network that combines a social network, semantic concept analysis, and citation analysis, and proposed a particle swarm algorithm to recommend reviewers for submissions. Maleszka et al. [195] used a modular approach to determine a grouping of reviewers that consisted of a keyword-based module, a social graph module, and a linguistic module. A summary of all IR-based reviewer recommendations can be found in Table 14.

Table 14 Overview of reviewer recommendation systems, IR-based

Optimization-based reviewer recommendations focus more on theory, modeling an algorithm of assignments under multiple constraints such as reviewer workload, authority, diversity, and conflict of interest (COI).

Sun et al. [196] proposed a hybrid of knowledge and decision models to solve the proposal-reviewer assignment problem under constraints. Kolasa and Krol [197] compared artificial intelligence methods for reviewer-paper assignment problems, namely, genetic algorithms (GA), ant colony optimization (ACO), tabu search (TS), hybrid ACO-GA, and GA-TS, in terms of time efficiency and accuracy. Chen et al. [198] employed a two-stage genetic algorithm to solve the project-reviewer assignment problem. In the first stage, reviewers were assigned by taking into consideration their respective preferences; in the second stage, review venues were arranged so as to minimize the number of venue changes for reviewers.

Das and Gocken [199] used fuzzy linear programming to solve the reviewer assignment problem by maximizing the matching degree between expert sets and grouped proposals, under crisp constraints. Tayal et al. [200] used type-2 fuzzy sets to represent reviewers’ expertise in different domains, and proposed using the fuzzy equality operator to calculate equality between the set representing the expertise levels of a reviewer and the set representing the keywords of a submitted proposal, and optimized the assignment under various constraints.

Wang et al. [201] formulated the problem as a multiobjective mixed integer programming model that considers the Direct Matching Score (DMS) between manuscripts and reviewers, Manuscript Diversity (MD), and Reviewer Diversity (RD), and proposed a two-phased stochastic-biased greedy algorithm (TPGA) to solve the problem. Long et al. [202] studied the paper-reviewer assignment problem from the perspective of goodness and fairness, where they proposed maximizing topic coverage and avoiding conflicts of interest (COI) as the optimization objectives. They also designed an approximation method that provides a 1/3 approximation.

Kou et al. [203] modeled reviewers’ published papers as a set of topics and performed weighted-coverage group-based assignments of reviewers to papers. They also proposed a greedy algorithm that achieves a 1/2 approximation ratio compared with the exact solution. Kou et al. [204] developed a system that automatically extracts the profiles of reviewers and submissions in the form of topic vectors using the author-topic model (ATM) and assigns reviewers to papers based on the weighted coverage of paper topics.
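The idea of assigning a group of reviewers by weighted topic coverage can be sketched with a simple greedy procedure. This is a simplified single-paper illustration under our own assumptions, not the algorithm of Kou et al. [203], and we make no claim about its approximation ratio: the coverage definition (per topic, the paper's weight capped by the best reviewer's weight), the function names, and the topic weights are all hypothetical.

```python
def coverage(paper, group):
    """Weighted coverage of a paper's topics by a group of reviewers.

    Assumed definition: for each topic, the group covers the paper up to the
    strongest expertise available in the group, capped at the paper's weight.
    """
    return sum(
        min(weight, max((r.get(topic, 0.0) for r in group), default=0.0))
        for topic, weight in paper.items()
    )


def greedy_group(paper, reviewers, size):
    """Repeatedly add the reviewer with the largest marginal coverage gain."""
    chosen = []
    remaining = dict(reviewers)
    while remaining and len(chosen) < size:
        current = [reviewers[name] for name in chosen]
        base = coverage(paper, current)
        best = max(
            remaining,
            key=lambda name: coverage(paper, current + [remaining[name]]) - base,
        )
        chosen.append(best)
        del remaining[best]
    return chosen


# Hypothetical topic vectors for one paper and three candidate reviewers.
paper = {"ir": 0.5, "ml": 0.3, "db": 0.2}
reviewers = {
    "r1": {"ir": 0.6, "ml": 0.1},
    "r2": {"ml": 0.7, "db": 0.1},
    "r3": {"ir": 0.3, "db": 0.5},
}

group = greedy_group(paper, reviewers, size=2)
group_coverage = coverage(paper, [reviewers[name] for name in group])
print(group, round(group_coverage, 2))
```

In this toy instance the greedy procedure first picks r1, who covers the dominant topic, and then r2, whose expertise adds the most coverage on the remaining topics.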

Stelmakh et al. [205] designed an algorithm, PeerReview4All, which is based on an incremental max-flow procedure to maximize the review quality of the most disadvantaged papers (fairness objective) and to ensure the correct recovery of the papers that should be accepted (accuracy objective). Yesilcimen and Yildirim [206] proposed an alternative mixed integer programming formulation for the reviewer assignment problem whose size grows polynomially as a function of the input size. A summary of all the optimization-based reviewer recommendation papers is presented in Table 15.

Table 15 Overview of reviewer recommendation systems, optimization-based

Finally, hybrids of both methods appear in other studies. Conry et al. [207] modeled reviewer-paper preferences using CF of ratings, latent factors, paper-to-paper content similarity, and reviewer-to-reviewer content similarity, and optimized the paper assignment under global conference constraints; the assignment was thereby transformed into a linear programming problem. Tang et al. [208] formulated the problem of expertise matching as a convex cost flow problem, which turned the recommendation into an optimization problem under constraints, and also used online matching algorithms to incorporate user feedback into the system.

In one of the most popular systems for conference reviewer assignment, Charlin and Zemel [209] addressed the assignment by first using a language model and LDA for learning reviewer expertise and submission topics, followed by a linear regression for initial predictions of reviewers’ preferences, combined with reviewers’ elicitation scores (reviewers’ disinterest or interest) in specific papers for the final recommendation, and optimized the objective functions under constraints. Liu et al. [210] constructed a graph network for reviewers and query papers using LDA to establish edge weights, and used the Random Walk with Restart (RWR) model on the graph network with sparsity constraints to recommend reviewers with the highest probabilities, incorporating aspects of expertise, authority, and diversity. Liu et al. [211] combined the heuristic knowledge of expert assignment and techniques of operations research, in which different aspects are involved, such as reviewer expertise, title, and project experience. A multiobjective optimization problem was formulated to maximize the total expertise level of the recommended experts and avoid conflicts between reviewers and authors. Ogunleye et al. [212] used a mixture of TF-IDF, LSI, LDA, and word2vec to represent the semantic similarity between submissions and reviewers’ publications and then used integer linear programming to match submissions with the most appropriate reviewers. Jin et al. [213] extracted topic distributions of reviewers’ publications and submissions using the Author-Topic Model (ATM) and Expectation Maximization (EM), then formulated the problem of reviewer assignment as an integer linear programming problem that takes into consideration the topic relevance, interest trend of a reviewer candidate, and authority of candidates. A summary of the reviewer recommendation papers is presented in Table 16.
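The Random Walk with Restart (RWR) model mentioned above can be illustrated with a plain power iteration. This is a generic sketch under stated assumptions: it omits the sparsity constraints and the LDA-derived edge weights of Liu et al. [210], and the graph, edge weights, restart probability, and function name are hypothetical.

```python
def random_walk_with_restart(adj, start, restart=0.15, iters=100):
    """Approximate stationary visiting probabilities of a walk that returns
    to `start` with probability `restart` at every step.

    `adj` maps each node to a {neighbor: edge weight} dictionary; every
    neighbor must also appear as a key of `adj`.
    """
    probs = {node: 1.0 if node == start else 0.0 for node in adj}
    for _ in range(iters):
        nxt = {node: (restart if node == start else 0.0) for node in adj}
        for node, neighbors in adj.items():
            total = sum(neighbors.values())
            if total == 0:
                # Dangling node: send its walking mass back to the start node.
                nxt[start] += (1 - restart) * probs[node]
                continue
            for neighbor, weight in neighbors.items():
                nxt[neighbor] += (1 - restart) * probs[node] * weight / total
        probs = nxt
    return probs


# Hypothetical graph: one query paper and three reviewer candidates.
graph = {
    "paper": {"r1": 0.8, "r2": 0.2},
    "r1": {"paper": 0.8, "r3": 0.5},
    "r2": {"paper": 0.2},
    "r3": {"r1": 0.5},
}

scores = random_walk_with_restart(graph, "paper")
ranked_reviewers = sorted(["r1", "r2", "r3"], key=lambda name: -scores[name])
print(ranked_reviewers)
```

Reviewers are ranked by their visiting probability, so a candidate that is strongly connected to the query paper, directly or through other reviewers, is recommended first.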

Table 16 Detailed overview of reviewer recommendation systems, other

In the Big Data era, extensive data have been generated for scientific discoveries. However, storing, accessing, analyzing, and sharing a vast amount of data is becoming a major challenge and bottleneck for scientific research. Furthermore, making a large amount of public scientific data findable, accessible, interoperable, and reusable (FAIR) is challenging. Many repositories and knowledge bases have been established to facilitate data sharing. Most of these repositories are domain-specific, and none of them recommend datasets to researchers or users. Furthermore, over the past two decades, there has been an exponential increase in the number of datasets added to these dataset repositories. Researchers must visit each repository to find suitable datasets for their research. In this case, a dataset recommender would be helpful to researchers. It can save time and increase the visibility of the datasets.

A dataset recommender is not commonly used. However, dataset retrieval is a popular information retrieval task. Many dataset retrieval systems exist for general datasets as well as biomedical datasets. Google’s Dataset Search is a popular search engine for datasets from different domains. DataMed is another dataset search engine specific to biomedical domain datasets that combines biomedical repositories and enhances query searching using advanced natural language processing (NLP) techniques [214, 215]. DataMed indexes and provides the functionality to search diverse categories of biomedical datasets [215]. The research focus of DataMed is to retrieve datasets using a focused query. Search engines such as DataMed or Google Dataset Search are helpful when the user knows the type of dataset to search for, but determining the user intent of web searches is a difficult problem because of the sparse data available concerning the searcher [216].

A few experiments have been performed on data linking where similar datasets can be clustered together using different semantic features. Data linking or identifying/clustering similar datasets has received relatively less attention in research on recommendation systems. Specifically, only a few papers [217,218,219] have been published on this topic. Ellefi et al. [218] defined dataset recommendation as the problem of computing a rank score for each set of target datasets (\(D_T\)) such that the rank score indicates the relatedness of \(D_T\) to a given source dataset (\(D_S\)). The rank scores provide information on the likelihood of a \(D_T\) containing linking candidates for \(D_S\). Similarly, Srivastava [219] proposed a dataset recommendation system by first creating similarity-based dataset networks, and then recommending connected datasets to users for each searched dataset. This recommendation approach is difficult to implement because of the cold start problem. Here, the cold start problem refers to the user’s initial dataset selection, where the user has no idea what dataset to select/search for. If the user lands on an incorrect dataset, the system will always recommend the wrong dataset to the user.
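A similarity-based dataset network of the kind described above can be sketched as follows. This is an illustrative assumption rather than the method of Srivastava [219]: the choice of Jaccard similarity over keyword sets, the threshold value, the dataset identifiers, and the function names are all hypothetical.

```python
from itertools import combinations


def jaccard(a, b):
    """Jaccard similarity between two keyword sets."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def build_network(datasets, threshold):
    """Connect every pair of datasets whose similarity reaches the threshold."""
    network = {name: {} for name in datasets}
    for u, v in combinations(datasets, 2):
        similarity = jaccard(datasets[u], datasets[v])
        if similarity >= threshold:
            network[u][v] = similarity
            network[v][u] = similarity
    return network


def recommend(network, name):
    """Return datasets connected to `name`, most similar first."""
    neighbors = network[name]
    return sorted(neighbors, key=lambda other: (-neighbors[other], other))


# Hypothetical datasets described by keyword sets.
datasets = {
    "ds_a": {"rna-seq", "human", "liver"},
    "ds_b": {"rna-seq", "human", "kidney"},
    "ds_c": {"microarray", "mouse", "brain"},
    "ds_d": {"rna-seq", "mouse", "liver"},
}

network = build_network(datasets, threshold=0.4)
print(recommend(network, "ds_a"))
print(recommend(network, "ds_c"))
```

The second call shows the limitation discussed above: a user who starts from a dataset with no sufficiently similar neighbors, or from an unsuitable dataset, receives no useful recommendations.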

Patra et al. [220, 221] and Zhu et al. [222] proposed a dataset recommendation system for the Gene Expression Omnibus (GEO) based on the publications of researchers. This system recommends GEO datasets using classification and similarity-based approaches. Initially, they identified the research areas from the publications of researchers using the Dirichlet Process Mixture Model (DPMM) and recommended datasets for each cluster. The classification-based approach uses several machine and deep learning algorithms, whereas the similarity-based approach uses cosine similarity between publications and datasets. This is the first study on dataset recommendations.

Obtaining grants or funding for research is essential in academic settings. Grants help researchers in many ways during their careers. Finding appropriate funding opportunities is an important step in this process, and there are multiple grant opportunities available that a researcher may not be aware of. No universal repository is available for funding announcements worldwide. However, a few repositories are available for funding announcements in the United States of America, such as grants.gov, NIH, and SPIN. These websites host many funding opportunities in various areas. Furthermore, multiple new opportunities become available daily. Thus, it is difficult for researchers to find suitable opportunities. A recommendation system for funding announcements will help researchers find appropriate research funding opportunities. Recently, Zhu et al. [223] developed a grant recommendation system for NIH grants based on researchers’ publications. They formulated the recommendation as a classification task using Bidirectional Encoder Representations from Transformers (BERT) to capture intrinsic, nonlinear relationships between researchers’ publications and grant announcements. Internal and external evaluations were performed to assess the usefulness of the system. Two publications are available on developing a search engine to find Japanese research announcements [224, 225]. The titles of these papers suggest recommendation systems; however, the full text reveals that these publications describe the search for funding announcements in Japan. These publications describe a keyword-based search engine using TF-IDF and association rules.

Numerous recommendation systems have been developed since the beginning of the twenty-first century. In this comprehensive survey, we discussed all common types of scholarly recommendation systems outlining the data resources, applied methodologies and evaluation metrics.

Literature recommendation remains the most studied area of scholarly recommendation. With the increasing need to collaborate with other researchers and publish research results, recommenders for collaborators and reviewers are becoming popular. Compared with these popular research targets, published recommendation systems for conferences/journals, datasets, and grants are relatively less common.

To develop recommendation systems and evaluate their results, researchers commonly construct datasets using information extracted from multiple resources. Published open-source databases, such as DBLP, ACM, and IEEE Digital Libraries, are the most commonly used sources for multiple types of recommendation systems. Some web services containing scholarly information about their users, or social tags added by researchers, such as ScholarMate and CiteULike, were also used to develop recommendation systems.

Content-based filtering (CBF) is the most commonly used approach for recommendation systems. Because academic resources require processing of context information, keyword measurement, and topic search, most recommendation systems were built on CBF. However, it is difficult to consider the popularity and rating of objects in traditional CBF. To overcome this limitation, CF has been used, especially when recommending items based on researchers’ interests and profiles. With the rapid development of recommendation systems and the need to overcome high computational costs, several recommenders have used hybrid methods combining CBF and CF to achieve better performance.

Based on the information gathered for the survey, we provide the following suggestions for better recommendation developments:

Based on extensive research, our literature review provides a comprehensive summary of scholarly recommendation systems from various perspectives. For researchers interested in developing future recommendation systems, this would be an efficient overview and guide.

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

Zhang, Z., Patra, B.G., Yaseen, A. et al. Scholarly recommendation systems: a literature survey. Knowl Inf Syst 65, 4433–4478 (2023). https://doi.org/10.1007/s10115-023-01901-x
